1. Nvidia Unveils Revolutionary AI-Powered Chatbot Utilizing Local Storage Instead of Cloud Dependence


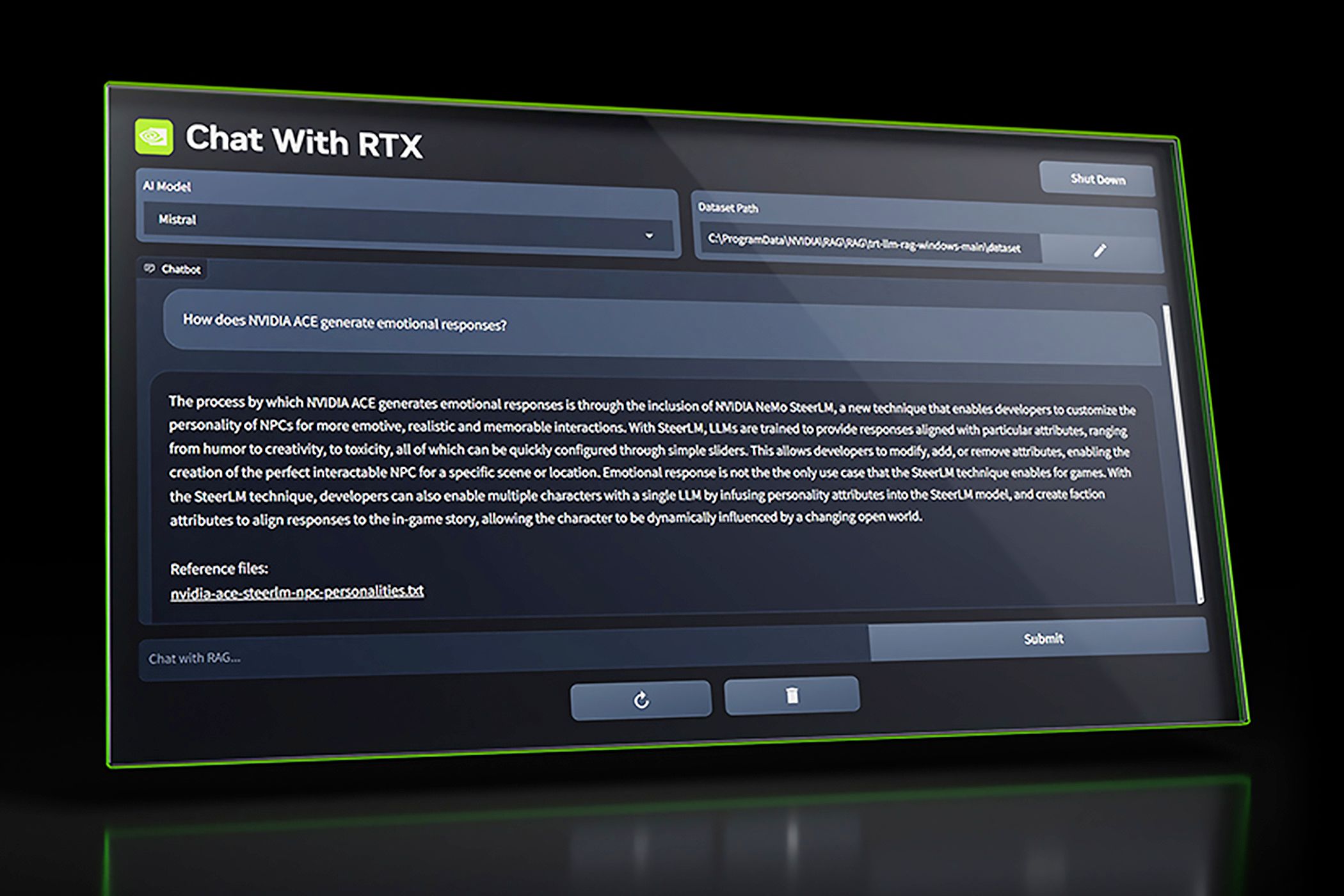

Those with compatible hardware can now install Chat With RTX, an AI chatbot that turns local files into its dataset. NVIDIA bills the application as a “tech demo,” but it’s effective at retrieving, summarizing, and synthesizing information from text-based files.

At its core, Chat With RTX is a personal assistant that digs through your documents and notes. It saves you the trouble of manually searching through files you’ve written, downloaded, or received from others. You might ask Chat With RTX to retrieve a piece of information from a long technical document, but you can also use it to answer casual questions like, “What was the restaurant my partner recommended while we were in Las Vegas?”

The personalized LLM can also pull transcripts from YouTube videos. If you want printable step-by-step instructions for a woodworking project, simply find a YouTube tutorial, copy the URL, and plug it into Chat With RTX. This works with standalone YouTube videos and playlists.

Because Chat With RTX runs locally, it produces fast results without sending your personal data to the cloud. The LLM scans only the files or folders the user selects. I should note that other LLMs, including those from Hugging Face and OpenAI, can run locally. Chat With RTX is notable for two reasons: it doesn’t require any expertise, and it shows off the capabilities of NVIDIA’s open-source TensorRT-LLM RAG project, which developers can use to build their own AI applications.
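The “RAG” in TensorRT-LLM RAG stands for retrieval-augmented generation. Before the model answers, the app looks up passages from your selected files that match the question and hands them to the LLM alongside it. That is how a general-purpose model can answer questions about your own notes. The sketch below shows only the retrieval-and-prompt step, and it rests on some assumptions. It is not NVIDIA’s implementation: it swaps in a plain bag-of-words cosine similarity where a real pipeline would use a neural embedding model, and the function and file names (`retrieve`, `build_prompt`, `vegas_trip.txt`) are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return up to k document names ranked by similarity, skipping zero-score matches."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(body))), name) for name, body in docs.items()]
    scored.sort(reverse=True)
    return [name for score, name in scored[:k] if score > 0]


def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Prepend the retrieved passages to the question before it would be sent to the LLM."""
    context = "\n\n".join(f"[{name}]\n{docs[name]}" for name in retrieve(query, docs, k))
    return f"Answer using only the context below.\n\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    # Hypothetical stand-ins for local files the user has pointed the assistant at.
    notes = {
        "vegas_trip.txt": "My partner recommended the noodle restaurant near the Strip in Las Vegas.",
        "router_manual.txt": "To reset the router, hold the button for ten seconds.",
        "groceries.txt": "Milk, eggs, bread, and coffee.",
    }
    question = "Which restaurant did my partner recommend in Las Vegas?"
    print(retrieve(question, notes))
    print(build_prompt(question, notes, k=1))
```

Only the matching note reaches the prompt. This is why the model never needs to see, or upload, the rest of your files.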

We went hands-on with Chat With RTX at CES 2024. It’s certainly a “tech demo,” and it’s something that must be used with intention. But it’s impressive nonetheless. Even if you’re uninterested in Chat With RTX’s information-retrieval or document-summarizing capabilities, this is a great example of how locally operated LLMs may weasel into people’s workflows.

And, although it isn’t mentioned on NVIDIA’s website, Chat With RTX can produce interesting responses to creative prompts. We asked the LLM to write a story based on the transcripts of a YouTube playlist, and it complied, albeit in a matter-of-fact tone. I’m excited to see how people will experiment with this application.

You can install Chat With RTX from the NVIDIA website. Note that this app requires an RTX 30-series or 40-series GPU with at least 8GB of VRAM. NVIDIA also offers a TensorRT-LLM RAG open-source reference project for those who want to build apps similar to Chat With RTX.

Source: NVIDIA

- Title: 1. Nvidia Unveils Revolutionary AI-Powered Chatbot Utilizing Local Storage Instead of Cloud Dependence

- Author: Jeffrey

- Created at : 2024-08-27 12:49:59

- Updated at : 2024-08-29 12:17:53

- Link: https://some-knowledge.techidaily.com/1-nvidia-unveils-revolutionary-ai-powered-chatbot-utilizing-local-storage-instead-of-cloud-dependence/

- License: This work is licensed under CC BY-NC-SA 4.0.